Generative AI has taken the world by storm since the introduction of ChatGPT in November 2022. Many organizations are already using GenAI or are considering adopting it. Low-Ops has not stood still either: in 2024 we introduced a proof-of-concept (PoC) assistant that helps you analyze app logs. Today, we’re sharing our vision for integrating GenAI with Low-Ops.

Use Cases

We see tremendous opportunities in applying GenAI to Low-Ops. Our strategy focuses on two main use cases:

- Architectural definitions that guide coding agents to build Low-Ops compatible code

- Intelligent assistance to lower operational efforts and streamline workflows

Architectural Definitions for AI Agents

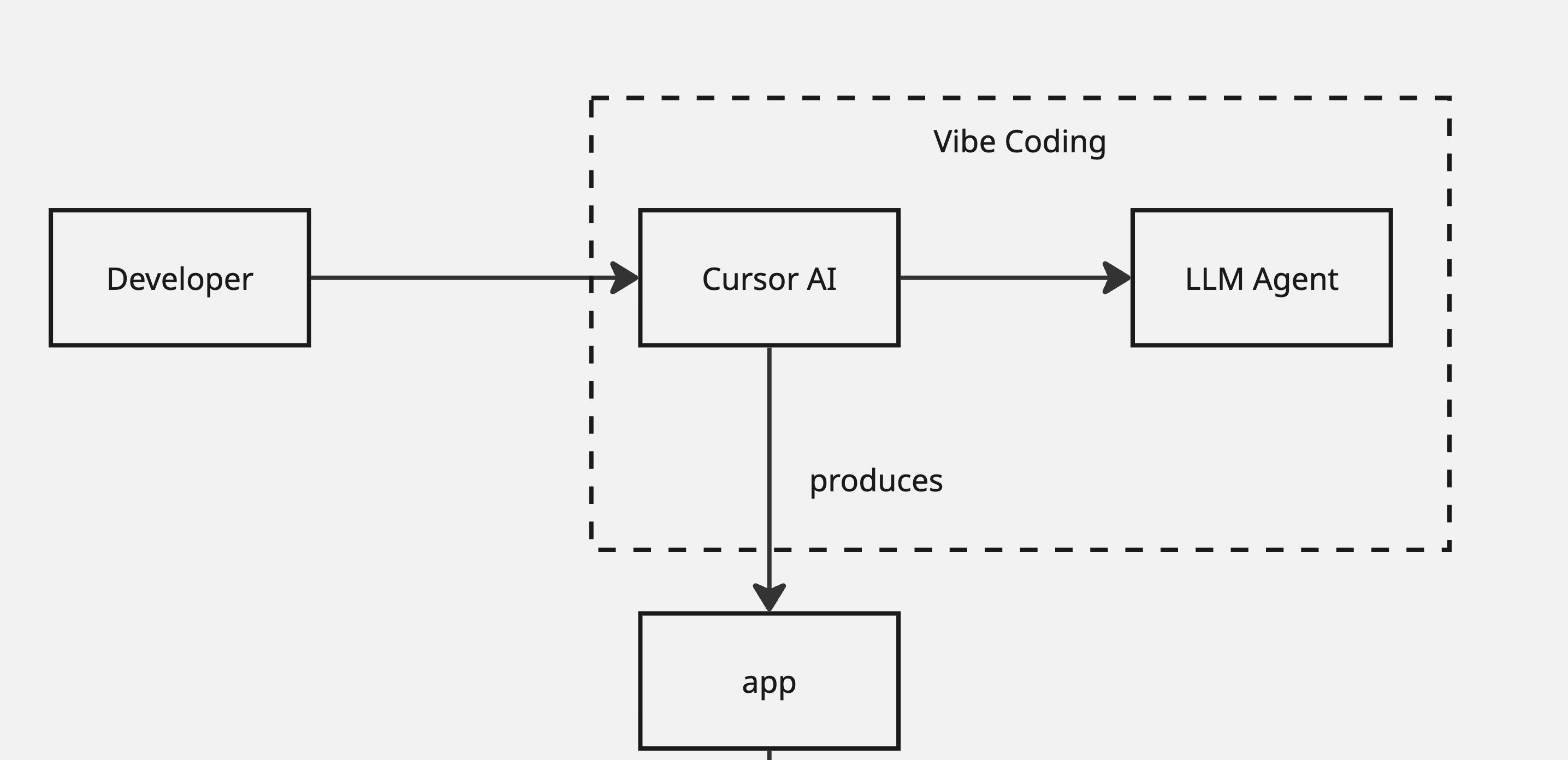

You can deploy any web app in Low-Ops, but your codebase must adhere to certain preconditions. With AI coding agents like Cursor, GitHub Copilot, or Windsurf, it’s possible to instruct the agent to ensure specific requirements are always met.

Examples of architectural constraints include:

- Dockerfile standards - Proper multi-stage builds and base image requirements

- Health checks - The app root must always return a 2xx status for health monitoring

- Environment variables - Respect configuration like port binding, timeouts, and feature flags

- Service consumption - How to connect to databases, object storage, and message queues

- Observability - How to expose metrics in Prometheus format and emit structured logs

These “agreements” ensure that apps can seamlessly consume services provided by Low-Ops while maintaining operational best practices.
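To make a few of these constraints concrete, here is a minimal sketch of an app that honors them, using only the Python standard library. It is an illustration under assumptions, not the Low-Ops reference implementation: the `PORT` variable name, the `8080` default, the `/metrics` path, and the `app_requests_total` metric name are all hypothetical choices made for this example.

```python
import json
import logging
import os
import sys
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumption: the platform injects the listening port as PORT. The variable
# name and the 8080 default are illustrative, not documented Low-Ops behavior.
PORT = int(os.environ.get("PORT", "8080"))

REQUEST_COUNT = 0  # HTTPServer handles one request at a time, so a plain counter suffices


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def render_metrics(count):
    """Return a single counter in the Prometheus text exposition format."""
    return (
        "# HELP app_requests_total Total HTTP requests handled.\n"
        "# TYPE app_requests_total counter\n"
        f"app_requests_total {count}\n"
    )


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        global REQUEST_COUNT
        REQUEST_COUNT += 1
        if self.path == "/":
            # Health check: the app root answers with a 2xx status.
            self._send(200, "text/plain; charset=utf-8", "ok\n")
        elif self.path == "/metrics":
            self._send(200, "text/plain; version=0.0.4; charset=utf-8",
                       render_metrics(REQUEST_COUNT))
        else:
            self._send(404, "text/plain; charset=utf-8", "not found\n")

    def _send(self, status, content_type, body):
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, format, *args):
        # Route access logs through the structured logger.
        logging.getLogger("access").info(format % args)


def main():
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logging.basicConfig(level=logging.INFO, handlers=[handler])
    HTTPServer(("0.0.0.0", PORT), Handler).serve_forever()
```

Calling `main()` starts the server. An architectural definition for a coding agent would describe rules like these in prose, so that generated code ends up with the same shape.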

With these definitions in place, a developer can simply prompt their coding agent with requests like:

Implement a file uploading form and persist files to object storage

The agent would automatically know that an S3-compatible object storage service is present and how to consume the credentials at runtime using the Low-Ops conventions.
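As a rough sketch of what "consuming the credentials at runtime" could look like, the snippet below reads object storage settings from environment variables and fails fast when any are missing. The variable names (`OBJECT_STORAGE_ENDPOINT` and so on) are invented for illustration; the actual Low-Ops convention may differ.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectStorageConfig:
    endpoint: str
    bucket: str
    access_key: str
    secret_key: str


# Hypothetical variable names, chosen for this example only.
_ENV_KEYS = {
    "endpoint": "OBJECT_STORAGE_ENDPOINT",
    "bucket": "OBJECT_STORAGE_BUCKET",
    "access_key": "OBJECT_STORAGE_ACCESS_KEY",
    "secret_key": "OBJECT_STORAGE_SECRET_KEY",
}


def load_object_storage_config(env=None):
    """Build the config from the environment, raising if a setting is absent."""
    env = os.environ if env is None else env
    missing = [name for name in _ENV_KEYS.values() if not env.get(name)]
    if missing:
        raise RuntimeError(
            "missing object storage settings: " + ", ".join(sorted(missing))
        )
    return ObjectStorageConfig(
        **{field: env[name] for field, name in _ENV_KEYS.items()}
    )
```

The resulting values could then be handed to any S3-compatible client. Reading them at startup rather than hard-coding them keeps credentials out of the codebase, which is the kind of rule an architectural definition would spell out for the agent.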

Intelligent Assistance

Many organizations start their AI journey with a chat model. Our PoC showed that a chat interface is not the best fit in this context. The spirit of Low-Ops is to reduce operations, not add more steps. Requiring users to type text as the primary interface feels awkward and counterproductive.

Instead, we believe the platform itself must be inherently intelligent. We’re achieving this by embedding GenAI capabilities directly into strategic workflows. Examples include:

- Smart alerts - When you receive an alert about your app, you get access to root cause analysis with clear remediation actions. For instance, if your app crashed, the system would analyze the logs and suggest increasing memory limits if the app ran out of memory, or identify specific code paths causing the issue.

- Performance optimization - Experiencing slow performance? The system combines distributed tracing data with your codebase analysis to pinpoint exactly where performance bottlenecks are occurring, complete with optimization recommendations.
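The smart alert flow can be pictured with a small, deliberately simplified sketch. The code below is a rule-based stand-in that maps crash logs to a suggested remediation; it is not the GenAI analysis itself, and the log patterns, cause labels, and suggestion texts are assumptions made for this example.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Suggestion:
    cause: str
    action: str


# Illustrative patterns only; real crash logs vary by runtime and platform.
_RULES = [
    (
        re.compile(r"out of memory|OOMKilled|MemoryError", re.IGNORECASE),
        Suggestion("out_of_memory", "Increase the app's memory limit."),
    ),
    (
        re.compile(r"Traceback \(most recent call last\)"),
        Suggestion("unhandled_exception",
                   "Inspect the code path shown in the stack trace."),
    ),
]


def triage(log_lines):
    """Return the first matching suggestion, checking rules in priority order."""
    for pattern, suggestion in _RULES:
        for line in log_lines:
            if pattern.search(line):
                return suggestion
    return Suggestion("unknown", "Review the full log context manually.")
```

For example, `triage(["INFO starting", "Killed process 42: Out of memory"])` yields the `out_of_memory` suggestion. A GenAI-backed version would replace the fixed rules with model analysis over richer context such as logs, traces, and code.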

All of this is possible with Low-Ops because it’s a fully integrated platform. Unlike cobbled-together toolchains, Low-Ops interconnects services to provide the richest possible context for GenAI to analyze and advise, with you firmly in control.

Roadmap

Q4 2025: Low-Ops app templates will be updated to be AI-aware, implementing architectural definitions to assist with “vibe coding”, where you describe what you want and AI agents handle the implementation details while following Low-Ops best practices.

Q1 2026: We’re launching Low-Ops AI in private beta with smart alerts and intelligent root cause analysis. Deeper integrations will be added gradually based on user feedback and real-world usage patterns.

Want early access? Subscribe to our newsletter to stay informed and get access to the private beta.